The post Bridging Design and Runtime Gaps: AsyncAPI in Event-Driven Architecture appeared first on Linux.com.

Various approaches exist for implementing asynchronous interactions and APIs, each tailored to specific use cases and requirements. Despite this diversity, they share a common set of key concepts: whether a system is built on message queues, event-driven architectures, or other asynchronous paradigms, the underlying principles remain consistent.

AsyncAPI builds on this shared foundation, giving developers a unified vocabulary for these essential concepts across a spectrum of techniques. This approach fosters interoperability and adds flexibility across different asynchronous implementations, which benefits developers directly.

From planning to execution: Design and runtime phases of EDA

Design time and runtime are distinct phases in the lifecycle of an event-driven system, each serving a different purpose:

Design time: This phase covers the design and development of the event-driven system, when architects and developers plan and structure it. Typical activities include:

- Designing event flows

- Schema definition

- Topic or channel design

- Error handling and retry policies

- Security considerations

- Versioning strategies

- Metadata management

- Testing and validation

- Documentation

- Collaboration and communication

- Performance considerations

- Monitoring and observability

The design phase yields assets, including a well-defined and configured messaging infrastructure. This encompasses components such as brokers, queues, topics/channels, schemas, and security settings, all tailored to meet specific requirements. The nature of these assets may vary based on the choice of the messaging system.

Runtime: This phase occurs when the system is in operation, actively processing events according to the design-time configurations and settings and responding to triggers in real time. Typical runtime concerns include:

- Dynamic event routing

- Concurrency management

- Scalability adjustments

- Load balancing

- Distributed tracing

- Alerting and notification

- Adaptive scaling

- Monitoring and troubleshooting

- Integration with external systems

The output of this phase is the ongoing operation of the messaging platform, with messages being processed, routed, and delivered to subscribers based on the configured settings.
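To make the split concrete, here is a minimal Python sketch under simple in-memory assumptions. The channel names, service names, and the single-level `*` wildcard are hypothetical conventions for illustration, not any particular broker's topic syntax. The subscription table stands in for a design-time asset, and `dispatch` plays the runtime role of routing each event using only that table.

```python
# Design time: channels and their subscribers are decided up front and stored
# as configuration. Names are hypothetical; "*" matches exactly one segment.
DESIGN_TIME_SUBSCRIPTIONS = {
    "orders/created": ["shipping"],
    "orders/*": ["audit"],
}


def matches(pattern: str, channel: str) -> bool:
    """Return True if a channel matches a pattern, segment by segment."""
    p_parts, c_parts = pattern.split("/"), channel.split("/")
    return len(p_parts) == len(c_parts) and all(
        p == "*" or p == c for p, c in zip(p_parts, c_parts)
    )


def dispatch(subscriptions: dict, channel: str, payload: dict) -> list:
    """Runtime: deliver an event to every service whose pattern matches."""
    return [
        (service, payload)
        for pattern, services in subscriptions.items()
        if matches(pattern, channel)
        for service in services
    ]
```

Calling `dispatch(DESIGN_TIME_SUBSCRIPTIONS, "orders/created", {"id": 1})` yields deliveries to both `shipping` and `audit`. Changing who receives what means editing the design-time table, not the runtime code.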

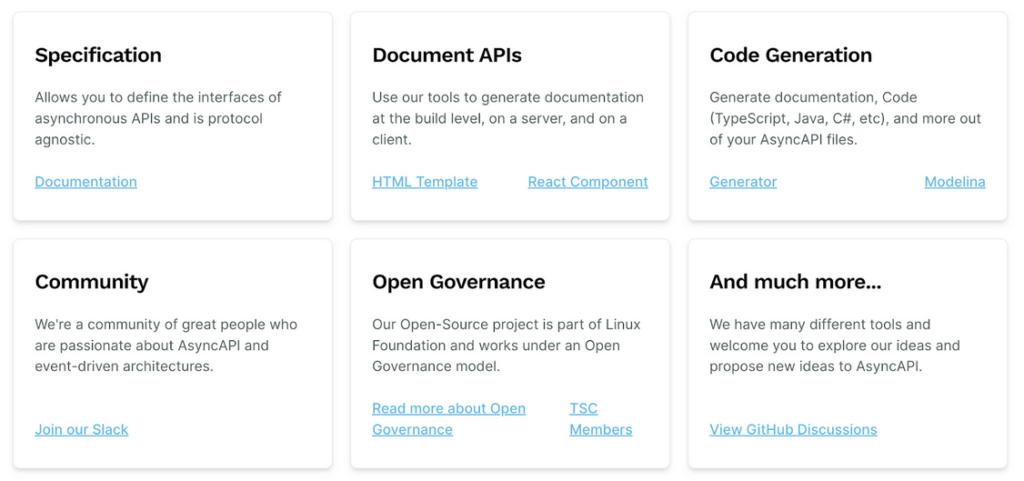

Role of AsyncAPI

AsyncAPI plays a pivotal role in asynchronous API design and documentation. Its significance lies in standardization: it provides a common, consistent framework for describing asynchronous APIs. An AsyncAPI document details crucial aspects such as message formats, channels, and protocols, enabling developers and stakeholders to understand and integrate with asynchronous systems effectively.

The AsyncAPI specification also serves as more than documentation: it acts as a communication contract, ensuring clarity and consistency in the messages exchanged between components or services. Furthermore, AsyncAPI facilitates code generation, speeding up development by offering a starting point for implementing components that adhere to the specified communication patterns.
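As a rough illustration of the contract idea, the sketch below writes an AsyncAPI 2.x-style document as a Python dict and checks a message against the payload schema declared for one channel. The channel, the field names, and the `contract_violations` helper are hypothetical, and only `required` and a few primitive `type` keywords are handled. In practice you would rely on a full JSON Schema validator or the AsyncAPI tooling rather than this hand-rolled check.

```python
# An AsyncAPI 2.x-style document written as a Python dict (normally loaded from
# YAML or JSON). The channel and payload fields are hypothetical examples.
SPEC = {
    "asyncapi": "2.6.0",
    "info": {"title": "Orders API", "version": "1.0.0"},
    "channels": {
        "orders/created": {
            "subscribe": {
                "message": {
                    "payload": {
                        "type": "object",
                        "required": ["orderId", "amount"],
                        "properties": {
                            "orderId": {"type": "string"},
                            "amount": {"type": "number"},
                        },
                    }
                }
            }
        }
    },
}

# JSON Schema primitive types mapped to Python types (a deliberately small subset).
JSON_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def contract_violations(spec: dict, channel: str, operation: str, message: dict) -> list:
    """List the ways `message` breaks the payload schema declared for `channel`."""
    schema = spec["channels"][channel][operation]["message"]["payload"]
    problems = [
        f"missing field: {name}"
        for name in schema.get("required", [])
        if name not in message
    ]
    for name, prop in schema.get("properties", {}).items():
        expected = JSON_TYPES.get(prop.get("type"))
        if name not in message or expected is None:
            continue
        value = message[name]
        # bool is a subclass of int in Python, so reject it unless a boolean is expected.
        wrong_bool = isinstance(value, bool) and prop["type"] != "boolean"
        if wrong_bool or not isinstance(value, expected):
            problems.append(f"wrong type for {name}: expected {prop['type']}")
    return problems
```

A producer and a consumer that both check against the same document agree on the message shape without coordinating directly, which is what makes the specification behave like a contract.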

In essence, AsyncAPI helps bridge the gap between design-time decisions and the practical implementation and operation of systems that rely on asynchronous communication.

Bridging the gap

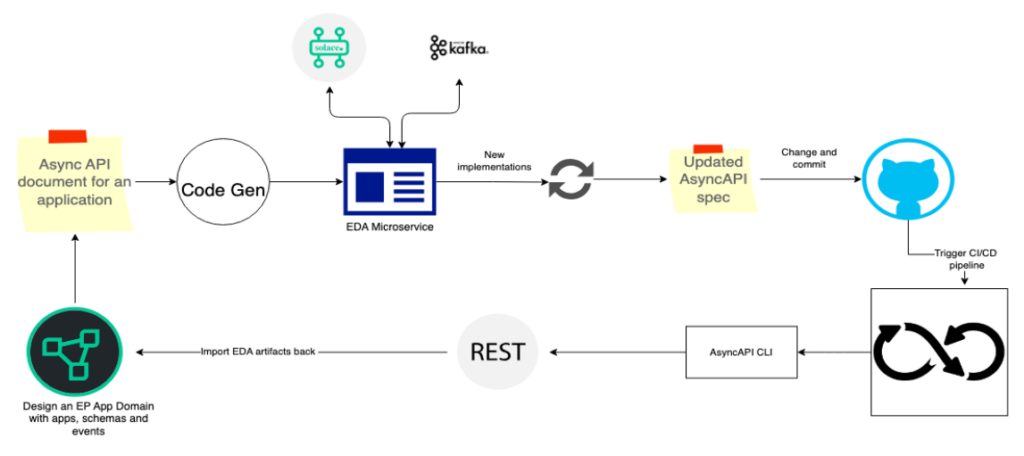

Let’s explore a scenario involving the development and consumption of an asynchronous API, coupled with a set of essential requirements:

- Designing an asynchronous API in an event-driven architecture (EDA):
  - Define the events, schemas, and publish/subscribe permissions of an EDA service
  - Expose the service as an asynchronous API

- Generating the AsyncAPI specification:
  - Use the AsyncAPI standard to generate a specification of the asynchronous API

- Using GitHub for storage and version control:
  - Check the AsyncAPI specification into GitHub, leveraging it as both a storage system and a version control system

- Configuring a GitHub workflow for document review:
  - Set up a GitHub action designed to review pull requests (PRs) related to changes in the AsyncAPI document
  - If changes are detected, initiate a validation process
  - Upon a successful review and PR approval, proceed to merge the changes
  - Synchronize the updated API design back to the design-time assets
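The "if changes are detected" step could look roughly like the following Python sketch, assuming the old and new AsyncAPI documents have already been parsed into dicts (for example, from YAML). `diff_channels` and `needs_validation` are hypothetical helpers, not part of any AsyncAPI or GitHub tool. A PR check could run them and start the validation job only when the report is non-empty.

```python
def diff_channels(old_spec: dict, new_spec: dict) -> dict:
    """Compare the channels of two parsed AsyncAPI documents."""
    old = old_spec.get("channels", {})
    new = new_spec.get("channels", {})
    return {
        # Channels present only in the new document.
        "added": sorted(new.keys() - old.keys()),
        # Channels dropped from the new document (often a breaking change).
        "removed": sorted(old.keys() - new.keys()),
        # Channels in both documents whose definitions differ in any way.
        "modified": sorted(
            name for name in old.keys() & new.keys() if old[name] != new[name]
        ),
    }


def needs_validation(report: dict) -> bool:
    """Start the (separate) validation job only if something changed."""
    return any(report.values())
```

Keeping the diff separate from validation lets reviewers see what changed in the PR while the validation step stays a plain pass/fail gate.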

This workflow keeps the design-time and runtime components consistently in sync. It is feasible because the API is documented with AsyncAPI, whose tooling ecosystem supports the validation and code generation needed to keep design time and runtime aligned.

Putting the scenario into action

Let us consider Solace Event Portal as the tool for building an asynchronous API and Solace PubSub+ Broker as the messaging system.

An event portal is a cloud-based event management tool that helps in designing EDAs. In the design phase, the portal facilitates the creation and definition of messaging structures, channels, and event-driven contracts. Leveraging the capabilities of Solace Event Portal, we model the asynchronous API and share the crucial details, such as message formats, topics, and communication patterns, as an AsyncAPI document.

We can further enhance this process by providing REST APIs that allow design-time assets, including events, schemas, and permissions, to be updated dynamically. GitHub Actions are used to import AsyncAPI documents and trigger updates to these design-time assets.
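One way to picture the import step is the hypothetical Python sketch below, which flattens an AsyncAPI 2.x-style document into one update record per channel operation. The record fields (`event`, `topic`, `direction`, `schema`) are invented for illustration and do not reflect the Solace Event Portal REST API. The sketch also ignores `$ref`s and `oneOf` messages. A real sync job would map these records onto whatever endpoints the design-time tool actually exposes.

```python
def design_time_updates(spec: dict) -> list:
    """Flatten an AsyncAPI 2.x-style document into hypothetical update records."""
    updates = []
    for topic, item in spec.get("channels", {}).items():
        # In AsyncAPI 2.x, a channel item may carry "publish" and/or "subscribe".
        for direction in ("publish", "subscribe"):
            operation = item.get(direction)
            if operation is None:
                continue
            message = operation.get("message", {})
            updates.append(
                {
                    # Fall back to the topic when the message has no name.
                    "event": message.get("name", topic),
                    "topic": topic,
                    "direction": direction,
                    "schema": message.get("payload", {}),
                }
            )
    return updates
```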

The synchronization between design-time and runtime components is made possible by adopting AsyncAPI as the standard for documenting asynchronous APIs. The AsyncAPI tooling ecosystem, encompassing validation and code generation, plays a key role in integrating changes smoothly. With this workflow, modifications to the AsyncAPI document translate into synchronized adjustments to both design-time and runtime assets.

Conclusion

Keeping the design time and runtime in sync is essential for a seamless and effective development lifecycle. When the design specifications closely align with the implemented runtime components, it promotes consistency, reliability, and predictability in the functioning of the system.

The adoption of the AsyncAPI standard is instrumental in achieving a seamless integration between the design-time and runtime components of asynchronous APIs in EDAs. The use of AsyncAPI as the standard for documenting asynchronous APIs, along with its robust tooling ecosystem, ensures a cohesive development lifecycle.

The effectiveness of this approach extends beyond specific tools, offering a versatile and scalable solution for building and maintaining asynchronous APIs in diverse architectural environments.

Author

Post contributed by Giri Venkatesan, Solace

The post Download the 2021 Linux Foundation Annual Report appeared first on Linux.com.

In 2021, The Linux Foundation continued to see organizations embrace open collaboration and open source principles, accelerating new innovations, approaches, and best practices. As a community, we made significant progress in the areas of cloud-native computing, 5G networking, software supply chain security, 3D gaming, and a host of new industry and social initiatives.

Download and read the report today.

The post How eBPF Streamlines the Service Mesh (TNS) appeared first on Linux.com.

There are several service mesh products and projects today, promising simplified connectivity between application microservices, while at the same time offering additional capabilities like secured connections, observability, and traffic management. But as we’ve seen repeatedly over the last few years, the excitement about service mesh has been tempered by practical concerns about additional complexity and overhead. Let’s explore how eBPF allows us to streamline the service mesh, making the service mesh data plane more efficient and easier to deploy.


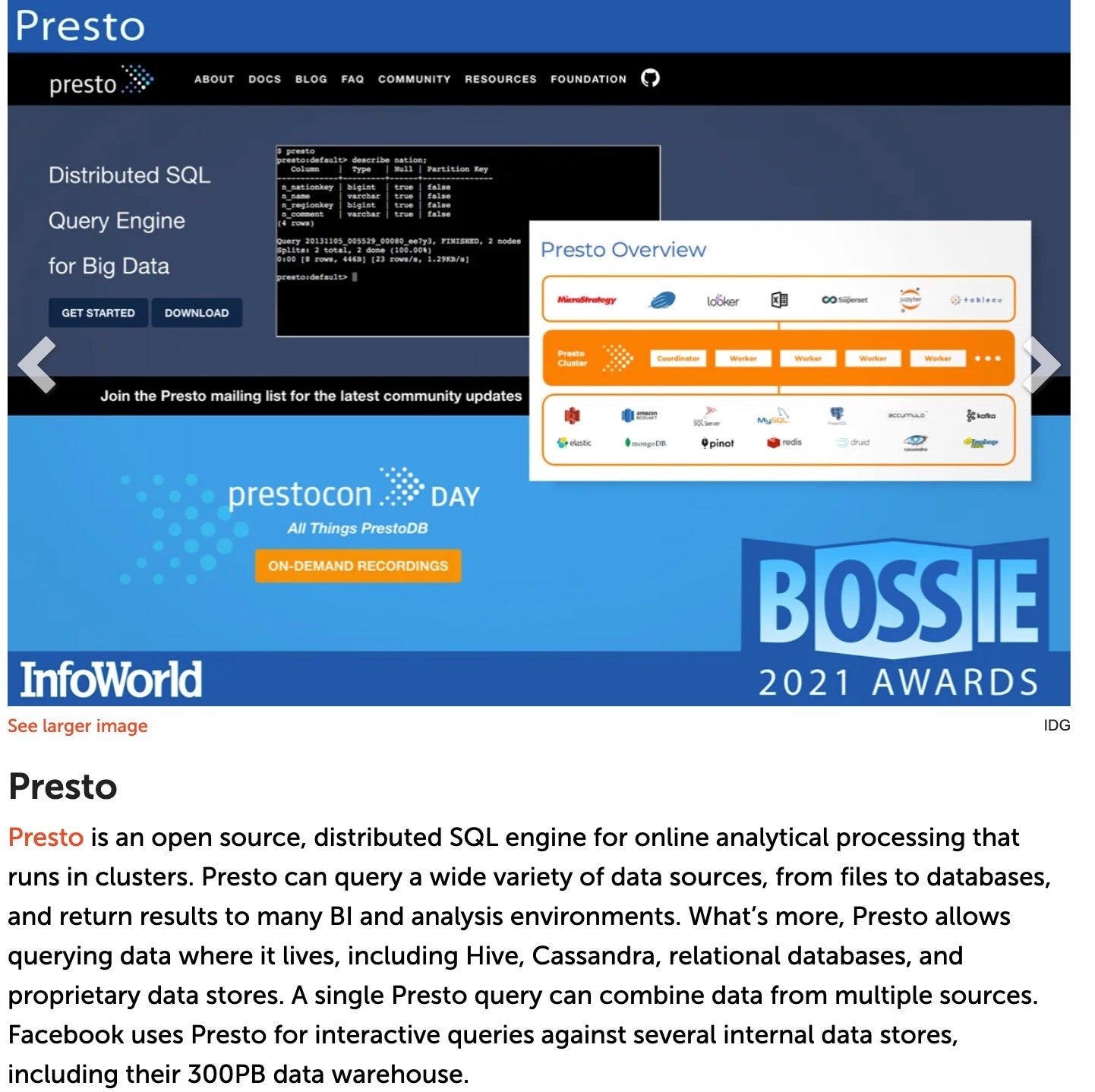

The post PrestoDB is Recognized as Best Open Source Software of 2021 Bossie Awards (InfoWorld) appeared first on Linux.com.

Read more about the 2021 Bossie Awards at InfoWorld.

The post Open Source Block Storage for OpenNebula Clouds appeared first on Linux.com.

The LINBIT OpenNebula TechDays are our joint effort to share our combined knowledge with the open source and storage community. We want to give you a thorough understanding of how to combine LINBIT’s software-defined storage solution with OpenNebula clouds.

OpenNebula is a powerful but easy-to-use open source platform for building and managing enterprise clouds. OpenNebula provides unified management of IT infrastructure and applications, avoiding vendor lock-in and reducing complexity, resource consumption, and operational costs. In contrast to OpenStack, which describes itself as a collection of independent projects, OpenNebula is an integrated solution that provides all the necessary components to manage a private, hybrid, or edge cloud.

LINBIT SDS is a software-defined storage solution for Linux that delivers highly available, replicated block-storage volumes with exceptional performance. It is a natural match for OpenNebula.

Both are open source, and both were born in the Linux software ecosystem. LINBIT SDS is well suited to a hyper-converged deployment on OpenNebula’s hypervisor nodes, since it uses considerably less CPU and memory than Ceph. At the same time, it delivers higher performance (IOPS and throughput), both for single volumes and accumulated over all volumes of a cluster.

Find all webinars, case studies, and discussions listed in the TechDays schedule. It is a free, all-virtual event on April 20th and 21st, with direct access to LINBIT and OpenNebula experts.

See you there!

The post SEAPATH: A Software Driven Open Source Project for the Energy Sector appeared first on Linux.com.

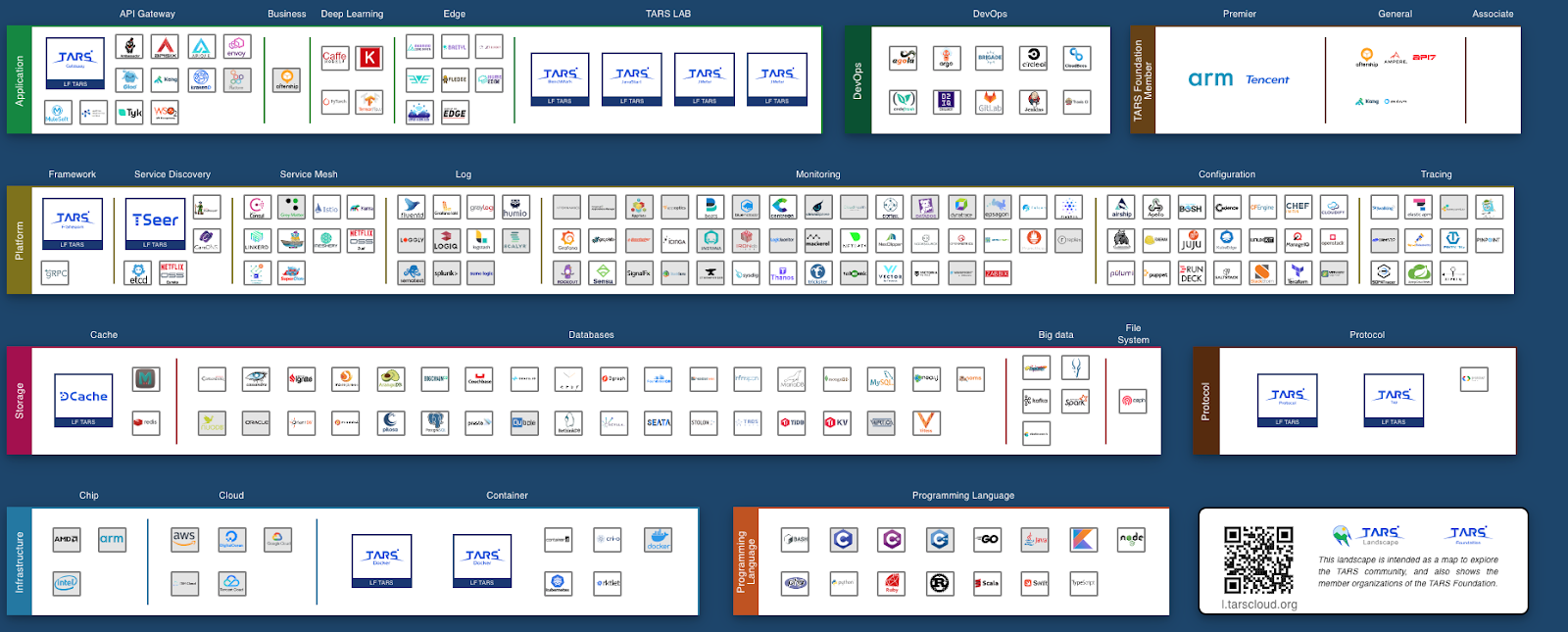

The post The TARS Foundation Celebrates its First Anniversary appeared first on Linux.com.

This year, four new projects have joined the TARS Foundation, expanding our technical community. The TARS Foundation launched TARS Landscape in July 2020, presenting an ideal and complete microservice ecosystem, which is the vision that the TARS open source community works to achieve. Furthermore, we welcome more open source projects to join the TARS community and go through our incubation process.

In September 2020, The Linux Foundation and TARS Foundation released a new, free training course, Building Microservice Platforms with TARS, on the edX platform. This course is designed for engineers working on microservices and for enterprise managers interested in exploring internal technical architectures that support digital transformation in traditional industries. The course explains the functions, characteristics, and structure of the TARS microservices framework while demonstrating how to deploy and maintain services written in different programming languages in the TARS Framework. In addition, anyone interested in software architecture will benefit from this course.

If you are interested in TARS training resources, please check out Building Microservice Platforms with TARS on edX.

Thanking our Members and Contributors

For more updates from TARS Foundation, please read our Annual Report 2020.

We would like to thank all our projects and project contributors. Thank you for your trust in the TARS Foundation. Without you and the value you bring to our entire community, our foundation would not exist.

We also want to thank our Governing Board, Technical Oversight Committee, Outreach Committee, and Community Advisor members! Every member has demonstrated their dedication and tireless efforts to ensure that the TARS Foundation is building a complete governance structure to deliver a more comprehensive range of programs and make real progress. With the guidance of these passionate and wise leaders from our governing bodies, the TARS Foundation is confident that it will become a neutral home for additional projects that solve critical problems surrounding microservices.

Thank you to all our members, Arm, Tencent, AfterShip, Ampere, API7, Kong, Zenlayer, and Nanjing University, for investing in the future of open source microservices. The TARS Foundation welcomes more companies and organizations to join our mission by becoming members.

Thank you to our end users! The TARS Foundation End User Community Plan was released to allow more companies to get involved with the TARS community. The purpose of the plan is to enable an open and free platform for communication and discussion about microservices technology and collaboration opportunities. Currently, the TARS Foundation has eight end-user companies, and we welcome more companies to join us as End Users.

What is next?

The TARS Foundation will continue to add more members and end-user companies in the next year while growing our shared resource pool for the benefit of our community. We will also look to include and incubate more projects, aiding our open source microservices ecosystem to empower any industry to turn ideas into applications at scale quickly. As part of our plan for next year, we aim to hold recurring meetup events worldwide and large-scale summits, creating a space for global developers to learn and exchange their ideas about microservices.

Words from our partners

Kevin Ryan, Senior Director, Arm

“Through our collaboration with the TARS Foundation and Tencent, we’ve leveraged a significant opportunity to build and develop the microservices ecosystem,” said Kevin Ryan, senior director of Ecosystem, Automotive and IoT Line of Business, Arm. “We look forward to future growth across the TARS community as contributions, members, and momentum continue to accelerate.”

Mark Shan, Open Source Alliance Chair, Tencent

As TARS Foundation turns one year old, Tencent will continue to collaborate with partners and build an open and free microservices ecosystem in open source. By consistently upgrading microservices technology and cultivating the TARS community, we look forward to creating more innovations and making social progress through technology.

Teddy Chan, CEO & Co-Founder, AfterShip

Best wishes to the TARS Foundation for turning one year old and continuing its positive influence on microservices. AfterShip will fully support the future development of the Foundation!

Mauri Whalen, VP of Software Engineering, Ampere

Ampere has been partnering with the TARS Foundation to drive innovation for microservices. Ampere understands the importance of this technology and is committed to providing Ampere/Arm64 Platform support and a performance testing framework for building the open microservices community. We are excited the TARS Foundation has reached its first birthday milestone. Their project is driving needed innovation for modern cloud workloads.

Ming Wen, Co-founder, API7

Congratulations on the first anniversary of the TARS Foundation! With the wave of enterprise digital transformation, microservices have become the infrastructure for connecting critical traffic. The TARS Foundation has gathered several well-known open source projects related to microservices, including the APISIX-based open source microservice gateway provided by api7.ai. We believe that, through the TARS Foundation’s efforts, microservices and the TARS Foundation will play an increasingly important role in digital transformation.

Marco Palladino, CTO and Co-Founder, Kong

In this new era driven by digital transformation 2.0, organizations around the world are transforming their applications to microservices to grow their customer base faster, enter new markets, and ship products faster. None of this would be possible without agile, distributed, and decoupled architectures that drive innovation, efficiency, and reliability in our digital strategy: in one word, microservices. Kong supports the TARS Foundation to accelerate microservices adoption in both open source ecosystems and the enterprise landscape, and to provide a modern connectivity fabric for all our services, across every cloud and platform.

Jim Xu, Principal Engineer & Architect, Zenlayer

Microservices are the next big thing in the cloud as they enable fast development, scaling, and time-to-market of enterprise applications. TARS Foundation leads in building a strong ecosystem for open-source microservices, from the edge to the cloud. As a leading-edge cloud service provider, Zenlayer is committed to enabling microservices in multi-cloud and hybrid cloud scenarios in collaboration with the TARS Foundation community. As the TARS Foundation enters its second year, Zenlayer will continue to innovate in infrastructure, platforms, and labs to empower microservice implementation for enterprises of all kinds.

He Zhang, Professor, Nanjing University

We fully support the development of microservices and the mission to co-build a Cloud-native ecosystem. Embracing open source and community contribution, we believe the TARS Foundation is creating a future with endless possibilities ahead.

About the TARS Foundation

The TARS Foundation is a nonprofit, open source microservice foundation under the Linux Foundation umbrella to support the rapid growth of contributions and membership for a community focused on building an open microservices platform. It focuses on open source technology that helps businesses to embrace the microservices architecture as they innovate into new areas and scale their applications. For more information, please visit tarscloud.org.

The post Here Is How To Create A Clean, Resilient Electrical Grid (Forbes) appeared first on Linux.com.

One leading thinker in the Grid Evolution space, Dr. Shuli Goodman, believes that the success of Linux to transform the tech world can and should be applied to next-generation electrical grids.

Dr. Shuli Goodman, Executive Director of LF Energy


Dr. Goodman is the executive director of LF Energy, a young offshoot of the Linux Foundation (“LF”) that partners with prominent organizations to develop open-source software that lets utilities and grid operators instantaneously understand and manage various new pools of energy supply (e.g., renewables, batteries). This software offers a single, common reference code base that all organizations can use as a foundation for building their own customized solutions. The advantage of the LF Energy approach is standardization and, more crucially, speed of implementation.

At this point, you may be asking the same question I asked Dr. Goodman: “Why do utilities and grid operators need software to run things anyway?”

The fact is that they never did. Back in the “good ole days” utilities were “communicating” with their customers in the same way someone with a megaphone communicates with an audience – shouting unidirectionally all the time. In this model, there is no room for complex multidirectional signals or need for software to manage the communication process.

Contrast that with the model that LF Energy is pioneering which, in our communication analogy, would be more similar to an Internet chat room than the old megaphone model. In an evolved, modern system, all parties are able to communicate bidirectionally in real-time with every other party.

The post KubeEdge: Reliable Connectivity Between The Cloud & Edge appeared first on Linux.com.

The post Unikraft: Pushing Unikernels into the Mainstream appeared first on Linux.com.

Unikernels have been around for many years and are famous for providing excellent performance in boot times, throughput, and memory consumption, to name a few metrics [1]. Despite their apparent potential, unikernels have not yet seen a broad level of deployment due to three main drawbacks:

- Hard to build: Putting a unikernel image together typically requires expert, manual work that needs redoing for each application. Also, many unikernel projects are not, and don’t aim to be, POSIX compliant, and so significant porting effort is required to have standard applications and frameworks run on them.

- Hard to extract high performance: Unikernel projects don’t typically expose high-performance APIs; extracting high performance often requires expert knowledge and modifications to the code.

- Little or no tool ecosystem: Assuming you have an image to run, deploying it and managing it is often a manual operation. There is little integration with major DevOps or orchestration frameworks.

While not all unikernel projects suffer from all of these issues (e.g., some provide some level of POSIX compliance but the performance is lacking; others target a single programming language and so are relatively easy to build, but their applicability is limited), we argue that no single project has been able to successfully address all of them, hindering any significant level of deployment. For the past three years, Unikraft (www.unikraft.org), a Linux Foundation project under the Xen Project’s auspices, has explicitly aimed to change this state of affairs and bring unikernels into the mainstream.

If you’re interested, read on, and please be sure to check out:

- The replay of our two FOSDEM talks [2,3] and the virtual stand [4]

- Our website (unikraft.org) and source code (https://github.com/unikraft).

- Our upcoming source code release, 0.5 Tethys (more information at http://www.unikraft.org/release/)

- unikraft.io, for industrial partners interested in Unikraft PoCs (or info@unikraft.io)

High Performance

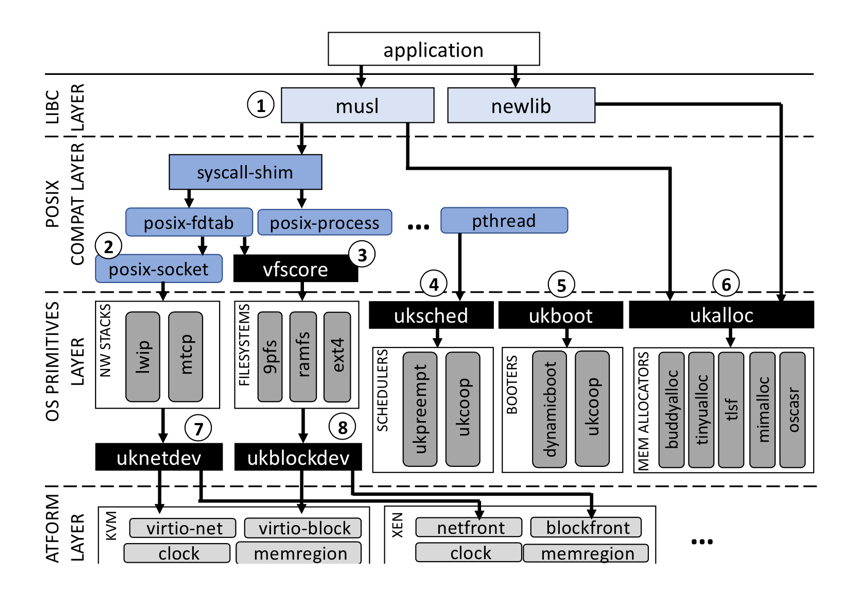

To provide developers with the ability to obtain high performance easily, Unikraft exposes a set of composable, performance-oriented APIs. Figure 1 shows Unikraft’s architecture: all components are libraries with their own Makefile and Kconfig configuration files, and so can be added to the unikernel build independently of each other.

Figure 1. Unikraft’s fully modular architecture showing high-performance APIs

APIs are also micro-libraries that can be easily enabled or disabled via a Kconfig menu, so a Unikraft unikernel can include exactly the APIs that best cater to an application’s needs. For example, an RPC-style application might turn off the uksched API (➃ in the figure) to implement a high-performance, run-to-completion event loop; similarly, an application developer can easily select an appropriate memory allocator (➅) to obtain maximum performance, or use multiple different ones within the same unikernel (e.g., a simple, fast memory allocator for the boot code, and a standard one for the application itself).
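The run-to-completion idea can be illustrated with a small conceptual sketch in Python. This is not Unikraft code: the request format and the `echo_upper` handler are hypothetical. What matters is the control flow. With no scheduler, a single loop takes one request, handles it completely, and only then moves on, so there are no context switches or locks to pay for.

```python
from collections import deque


def run_to_completion(requests, handler):
    """Process each request fully before taking the next; nothing is preempted."""
    queue = deque(requests)
    responses = []
    while queue:
        request = queue.popleft()  # take the oldest pending request
        responses.append(handler(request))  # handle it to completion, no yielding
    return responses


def echo_upper(request):
    """Hypothetical handler standing in for an application's RPC logic."""
    return {"id": request["id"], "body": request["body"].upper()}
```

The trade-off is that one slow request delays everything queued behind it, which is acceptable for short, uniform RPC handlers but not for long-running work.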

|

|

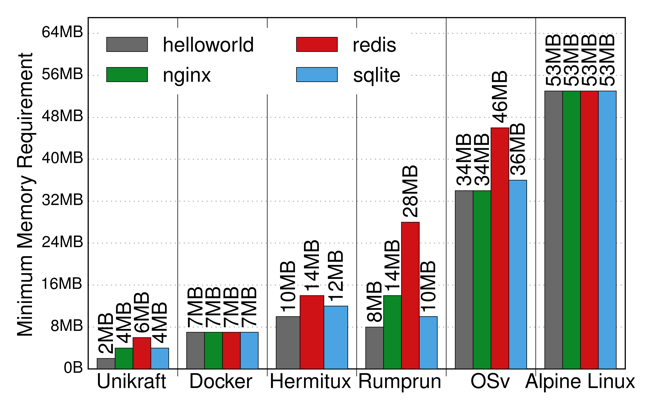

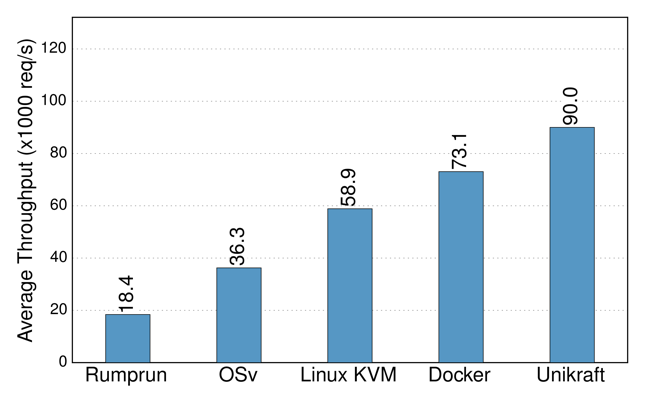

| Figure 2. Unikraft memory consumption vs. other unikernel projects and Linux | Figure 3. Unikraft NGINX throughput versus other unikernels, Docker, and Linux/KVM. |

These APIs, combined with the fact that all of Unikraft’s components are fully modular, result in high performance. Figure 2, for instance, shows Unikraft having lower memory consumption than other unikernel projects (HermiTux, Rump, OSv) and Linux (Alpine); Figure 3 shows that Unikraft outperforms them in NGINX requests per second, reaching 90K on a single CPU core.

Further, we are working on (1) a performance profiler tool to be able to quickly identify potential bottlenecks in Unikraft images and (2) a performance test tool that can automatically run a large set of performance experiments, varying different configuration options to figure out optimal configurations.

Ease of Use, No Porting Required

Forcing users to port applications to a unikernel to obtain high performance is a showstopper. Arguably, a system is only as good as the applications (or programming languages, frameworks, etc.) it can run. Unikraft aims to achieve good POSIX compatibility; one way of doing so is supporting a libc (e.g., musl) along with a large set of Linux syscalls.

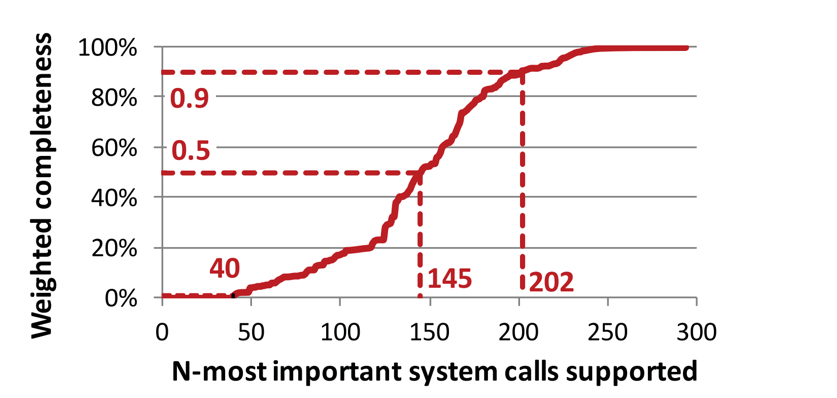

Figure 4. Only a certain percentage of syscalls are needed to support a wide range of applications

While there are over 300 of these, many are not needed to run a large set of applications, as shown in Figure 4 (taken from [5]). Supporting around 145 of them, for instance, is enough to run 50% of all libraries and applications in an Ubuntu distribution (many of which, such as desktop applications, are irrelevant to unikernels). As of this writing, Unikraft supports over 130 syscalls and a number of mainstream applications (e.g., SQLite, NGINX, Redis); programming languages and runtime environments such as C/C++, Go, Python, Ruby, WebAssembly, and Lua; several hypervisors (KVM, Xen, and Solo5); and ARM64 bare-metal deployment.
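The reasoning behind Figure 4 reduces to a subset check: an application can run only if every syscall it needs is implemented. The Python sketch below computes that unweighted coverage for a made-up set of applications and syscalls. The names and syscall lists are illustrative assumptions; the cited study [5] uses real distribution data and its own metrics rather than this simple count.

```python
def coverage(app_syscalls: dict, supported: set):
    """Return (fraction of runnable apps, sorted list of runnable app names)."""
    if not app_syscalls:
        return 0.0, []
    # An app is runnable only if its needed syscalls are a subset of `supported`.
    runnable = sorted(
        app for app, needed in app_syscalls.items() if needed <= supported
    )
    return len(runnable) / len(app_syscalls), runnable


# Made-up applications and syscall needs, for illustration only.
APPS = {
    "kv-store": {"read", "write", "socket", "accept", "epoll_wait"},
    "cli-tool": {"read", "write", "exit_group"},
    "desktop-app": {"read", "write", "ioctl", "shmget"},
}
SUPPORTED = {"read", "write", "exit_group", "socket", "accept", "epoll_wait"}
```

Here two of the three toy applications are runnable. Adding `ioctl` and `shmget` to the supported set brings coverage to 100%, which mirrors how each newly implemented syscall can unlock whole groups of applications at once.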

Ecosystem and DevOps

Another apparent downside of unikernel projects is the almost total lack of integration with existing, major DevOps and orchestration frameworks. Working towards this integration, in the past year we created the kraft tool, which lets users simply choose an application and a target platform (e.g., KVM on x86_64) and takes care of building the image and running it.

Beyond this, we have several ongoing sub-projects that we aim to deliver in the coming months:

- Kubernetes: If you’re already using Kubernetes in your deployments, this work will allow you to deploy much leaner, faster Unikraft images transparently.

- Cloud Foundry: Similarly, users relying on Cloud Foundry will be able to generate Unikraft images through it, once again transparently.

- Prometheus: Unikernels are also notorious for having very primitive or no means for monitoring running instances. Unikraft is targeting Prometheus support to provide a wide range of monitoring capabilities.

In all, we believe Unikraft is getting closer to bridging the gap between unikernel promise and actual deployment. We are very excited about this year’s upcoming features and developments, so please feel free to drop us a line if you have any comments, questions, or suggestions at info@unikraft.io.

About the author: Dr. Felipe Huici is Chief Researcher, Systems and Machine Learning Group, NEC Laboratories Europe GmbH

References

[1] Unikernels: Rethinking Cloud Infrastructure. http://unikernel.org/

[2] Is the Time Ripe for Unikernels to Become Mainstream with Unikraft? FOSDEM 2021 Microkernel developer room. https://fosdem.org/2021/schedule/event/microkernel_unikraft/

[3] Severely Debloating Cloud Images with Unikraft. FOSDEM 2021 Virtualization and IaaS developer room. https://fosdem.org/2021/schedule/event/vai_cloud_images_unikraft/

[4] Welcome to the Unikraft Stand! https://stands.fosdem.org/stands/unikraft/

[5] A study of modern Linux API usage and compatibility: what to support when you’re supporting. EuroSys 2016. https://dl.acm.org/doi/10.1145/2901318.2901341
